Welcome to The University of Toronto’s “Data-Driven Design” showcase.

Here you will find faculty project profiles documenting their areas of inquiry. Each presents an interest/challenge regarding their teaching practice that can be informed by analysis of quantitative data available within their selected course context. Project goals, methods, implementation strategy, and findings are presented.

- Improving student experience and academic success – Brett Beston (UTM – Psychology)

- Integrating experiential learning in a construction management course. – Brenda McCabe (Engineering – Civil and Mineral)

- Adjusting online discussion post length to improve student and faculty workload. – Sandra Merklinger (Nursing)

- Student use of video recordings for learning new material – Libbie Mills (Faculty of Arts and Science – Study of Religion)

- Measuring effectiveness of in-class activities and pre-class video content to enhance student learning. – Franco Taverna (Faculty of Arts and Science – Human Biology)

- Effective instructional practices to facilitate online delivery and ensure online learning readiness of course participants. – Marie-Anne Visoi (Faculty of Arts and Science – French)

Improving student experience and academic success

Brett Beston (UTM – Psychology)

| Inquiry Topic: How can teachers effectively manage and track the progress of many students to improve the student experience and academic success? Failure of a term test can signal RISK of poor performance in later assessments and highlight the need for targeted student support and intervention. However, this assessment is retroactive in nature and in many cases, ‘the damage has already been done’. The goal of this inquiry is to make use of Learning Management System (LMS) data to identify how simple measures of student usage can be applied to predict student success PRIOR to assessment. |

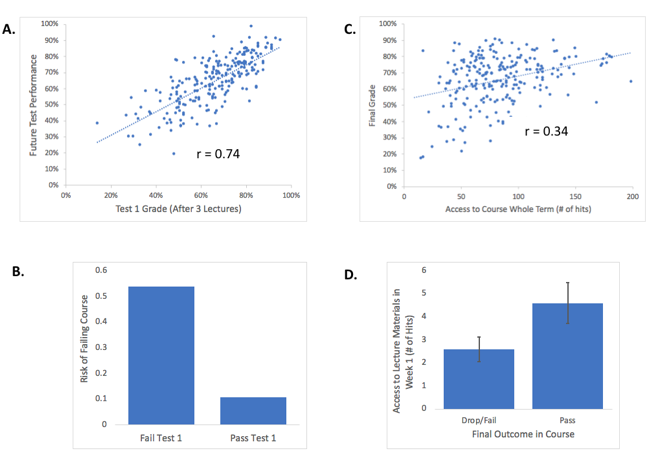

Example Analysis: Not surprisingly, early test performance is well correlated with future test performance (A). Students who ‘fail’ the first test are five times more likely to go on to fail the course than students who ‘pass’ (B). LMS hits show a weaker, but still significant, positive correlation between student use of the LMS and final course grades (C). Students who pass the course also access the course page more often during the first week of school than those who go on to drop or fail the course. |
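A comparison such as "five times more likely to fail" is a relative risk: the failure rate in one group divided by the failure rate in the other. The sketch below shows that calculation. The function name `relative_risk` and the counts are hypothetical and chosen only for illustration; they are not the study's data.

```python
def relative_risk(exposed_events, exposed_total, unexposed_events, unexposed_total):
    """Event rate in the exposed group divided by the rate in the unexposed group."""
    if exposed_total <= 0 or unexposed_total <= 0:
        raise ValueError("group sizes must be positive")
    if unexposed_events == 0:
        raise ValueError("relative risk is undefined when the comparison rate is zero")
    exposed_rate = exposed_events / exposed_total
    unexposed_rate = unexposed_events / unexposed_total
    return exposed_rate / unexposed_rate


# Hypothetical counts (NOT the study's data):
# 40 students failed test 1, and 20 of them went on to fail the course;
# 200 students passed test 1, and 20 of them went on to fail the course.
rr = relative_risk(20, 40, 20, 200)
print(f"Relative risk of failing the course: {rr:.1f}")
```

With these illustrative counts the failure rates are 50% and 10%, so the relative risk is 5.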

Inquiry Questions:

|

|

| Methods and Data Sources: Quantitative analytics available on the LMS were used to monitor and measure the relationship between student behaviour and risk in an introductory neuroscience course (n=252). Student LMS activity was followed using ‘statistics tracking’ to count the number of clicks (or ‘hits’) students made within our course page. This approach has previously been used to show a strong relationship between LMS usage patterns and student achievement (Campbell, Finnegan, and Collins, 2006). In this study, ‘student risk’ was broadly defined as any student who does not successfully complete the course, as indicated by a failing grade (<50%), or who drops the course after completing one or more tests. Course performance was measured through student achievement on two term tests and a final exam. Simple (bivariate) correlations were used to relate test performance and LMS course ‘hits’. The intention is to identify broader patterns within groups of students that are predictive of course outcomes. |
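The two ingredients of this method, a bivariate correlation and the ‘student risk’ definition, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the author's analysis: the function names `pearson_r` and `is_at_risk` and all data values are hypothetical, and Pearson's r is assumed as the bivariate correlation.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 values")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / sqrt(var_x * var_y)


def is_at_risk(final_grade, dropped_after_test):
    """Risk as defined in the text: a failing grade (<50%) or a drop after a test.

    final_grade is None for students who dropped and have no final grade.
    """
    return dropped_after_test or (final_grade is not None and final_grade < 50)


# Hypothetical data (NOT the study's data): LMS hits and final grades (%).
lms_hits = [12, 30, 45, 60, 80]
final_grades = [48, 55, 62, 70, 85]

r = pearson_r(lms_hits, final_grades)
flags = [is_at_risk(grade, False) for grade in final_grades]
print(f"r = {r:.2f}, students flagged at risk: {sum(flags)}")
```

The same `pearson_r` call could be applied to test 1 versus test 2 scores to compare how strongly each measure tracks later outcomes.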

|

| Lessons Learned (Challenges): Clearly, the best predictor of future performance is past performance. Accessing LMS analytics provides limited insights into student activity, yet may still be useful for drawing broad patterns of activity that are likely to indicate student ‘risk’. Other qualitative factors are also known to contribute to student achievement (programme enrollment, jobs, volunteer activities, economic status, etc.), and future iterations of the risk assessment will use LMS survey tools to capture these metrics. Once risk is assessed, the goal is to implement an intervention to help students become more engaged with the course. I am working in partnership with the RGASC to implement a Peer Facilitated Study Group (FSG) for students at risk. Using a combination of LMS tracking analytics and demographic surveys, students who are recognized as ‘at risk’ will be formally invited to attend FSG sessions. |

|

| Course Design Iteration Plans: Partner with the Robert Gillespie Academic Skills Centre to create a Peer Facilitated Support Group for Psy290 students. Encourage participation in the course through a series of incentivized LMS-related course activities (including discussion forums, surveys, and weekly pre-class quizzes). |

Plans for Sharing: Sharing will take place through CTSI educational events and in partnership with RGASC presentations of the FSG program. |

Integrating experiential learning in a construction management course.

Brenda McCabe (Engineering – Civil and Mineral)

| Inquiry Topic: Integrating experiential learning in a construction management course. Given that few students have construction experience and that this experience is valuable to them without regard to which discipline of civil engineering they choose, it is important to find ways in which they can gain an understanding of the challenges in this industry. Recent changes in occupational health and safety legislation made individual site visit projects with close interaction with site personnel infeasible. An innovative means to safely provide students with site knowledge and experience is needed. |

Example Analysis:

Data collection going forward, analysis may include:

Correlation between site visit engagement and:

Enthusiasm about the industry

|

Inquiry Questions:

|

|

| Method(s) and Data Sources: To achieve the objectives, data are needed to evaluate both students’ learning experience and the effectiveness of each medium. Learning experience can be based on informal mid-term evaluations and formal course evaluations with special questions included. From our learning management system (LMS), data on the frequency of media access and interaction by the students with the audio files can be collected. Because they will use the site visits to complete a report about construction sites, the quality of course reports can be correlated to download frequency of audio files. This may also be correlated to exam questions about the site visits. |

|

| Lessons Learned (Challenges): Site visits are more effective when mapped to current lecture topics, but this is challenging as the site visits are currently organized by regionally based walking tours, not project type. Using interviews in the podcasts can help make the materials reusable. They also help students gain respect for industry experts and understand their jobs. |

|

| Course Design Iteration Plans: Combinations of different media will be developed and compared for their effectiveness, student perceptions of value, and effort required to produce the media. Optional media include but are not limited to:

|

Plans for Sharing: Sharing the outcomes of this work could change the way in which construction engineering is taught in schools throughout the country. It could also have a major impact on engineering students’ perceptions of the construction industry. Reaching instructors is best done through presentations and demonstrations at meetings, education conferences, and professional conferences. After the project is fully developed, making the media publicly available will be considered. |

Adjusting online discussion post length to improve student and faculty workload.

Sandra Merklinger (Nursing)

| Inquiry Topic: Online graduate courses carry a perceived increase in workload for both faculty and students. In a content-dense online course, students not only have to complete weekly readings and review PowerPoint presentations, they also have to contribute mandatory scholarly posts to the online discussion forum. We sought to determine whether restricting the length of required discussion posts would, a) reduce workload for both students and the instructor, and b) have any negative impact on student midterm exam outcomes. |

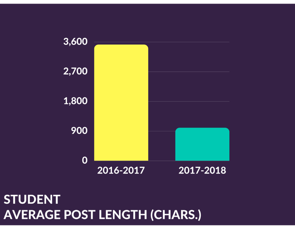

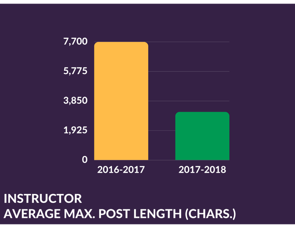

Example Analysis: Student post length, on average, was reduced by 72% when compared to the 2016/2017 cohort (Figure 1). Correspondingly, the maximum post length was also reduced by 72% in the 2017/2018 student group. Although the instructor was not subject to the same post length restriction, the average and maximum instructor post lengths were reduced by 30% and 59% respectively in the 2017/2018 academic year (Figure 2). This decrease in post length resulted in a decrease in workload in terms of the time spent reading discussion posts for both students and the instructor. The reduction in discussion post length, and therefore in content available for review by the students, did not negatively affect students’ midterm exam outcomes. The class average on the midterm exam for the 2017/2018 students, with restricted post length, was 81%, compared with a class average of 80% for the 2016/2017 non-limited cohort.

Figure 1. Average Student Post Length: Average student post length (left) decreased from 3520 characters in the 2016/2017 year to 999 characters in the 2017/2018 group. Maximum student post length (right) decreased from 9312 characters in the 2016/2017 year to 2620 characters in the 2017/2018 cohort.

Figure 2. Average Instructor Post Length: Average instructor post length (left) decreased from 666 characters in the 2016/2017 year to 465 characters in 2017/2018. Maximum instructor post length (right) decreased from 7685 characters in the 2016/2017 year to 3133 characters in 2017/2018. |
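The percentage reductions quoted above follow directly from the character counts reported in the figure captions. The short Python check below reproduces them. The helper name `percent_reduction` is an illustrative assumption, while the numbers are the ones reported in the captions.

```python
def percent_reduction(before, after):
    """Percentage decrease from `before` to `after`."""
    if before <= 0:
        raise ValueError("the starting value must be positive")
    return (before - after) / before * 100


# Character counts as reported in the Figure 1 and Figure 2 captions:
# (2016/2017 value, 2017/2018 value)
reported = {
    "student average": (3520, 999),
    "student maximum": (9312, 2620),
    "instructor average": (666, 465),
    "instructor maximum": (7685, 3133),
}

for label, (before, after) in reported.items():
    print(f"{label}: {percent_reduction(before, after):.0f}% shorter")
# student average: 72% shorter
# student maximum: 72% shorter
# instructor average: 30% shorter
# instructor maximum: 59% shorter
```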

Inquiry Questions:

|

|

| Method(s) and Data Sources: Two sections of the same course taught in back-to-back years, 2016/2017 and 2017/2018, were used to explore our inquiry questions. Students in the first year, 2016/2017, had no restrictions placed on them in terms of discussion post length. Students in the second year, 2017/2018, were asked to keep their posts to a maximum of 10 lines; these students received two to three reminders about post length throughout the term. Course data from the first term of each course were exported from the Blackboard Learning Management System, with a focus on the User Forum Data accessible through the Performance Dashboard. The data were then compared using average and maximum post length across both sections. |

|

| Lessons Learned (Challenges): We can infer, to a cautious degree given our sample size (n=14 per group), that limiting post length reduces student and instructor workload and that this condensed approach to the online discussion forum does not negatively impact student outcomes. |

|

| Course Design Iteration Plans: We did not include the number of attachments made by students in their posts in our analysis, nor did we put any limits on the length or number of attachments students could make. This is something we would examine in future as an additional means to reduce workload. We would also like to study students’ perceived workload by creating a questionnaire to be completed at the end of the course. Moving forward, we would recommend limiting student and instructor post length to a maximum of 10 lines: it is not only feasible but, more importantly, it has no negative impact on student outcomes. As a result, we would like to examine further methods by which we can modify the discussion activity and limit the length of individual posts. |

Plans for Sharing: The findings from this project will be presented at the D3 Showcase Event at CTSI and will also be presented to Faculty at the Lawrence S. Bloomberg Faculty of Nursing. |

Student use of video recordings for learning new material

Libbie Mills (Faculty of Arts and Science – Study of Religion)

| Inquiry Topic: The inquiry is centred on a beginner Sanskrit course, run in two parallel sections: one online and the other on campus. The course is fast-paced. Each week new material is introduced, and students must complete exercises to understand how that new material works, and how it fits into the system as a whole. The weekly exercises can be intimidating. The instructor sought to develop a learning tool, suitable for both online and on-campus learning, that would make them more approachable. The tool chosen was a set of video recordings in which a student, named Yumi, tackles a sample exercise for each set of weekly exercises. Students are advised to watch the recordings ahead of approaching the exercises themselves. The study assesses student usage of the video recordings. |

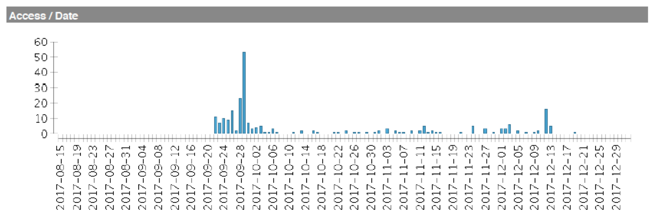

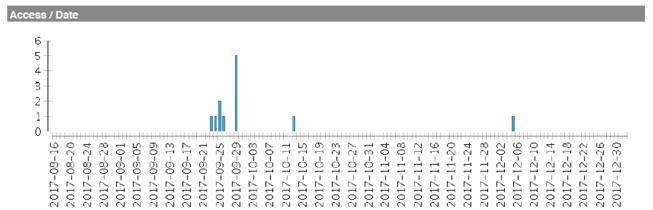

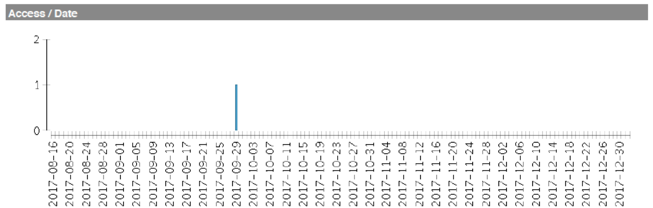

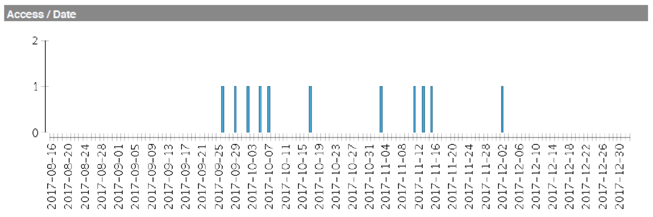

Example Analysis: The graphs show usage of a single video, made available to students on 2017-09-22 in preparation for class on 2017-09-29. They cover group usage of the video and three examples of individual usage of the video. |

Inquiry Questions:

|

|

| Method(s) and Data Sources: In 2015-16 Yumi was hired, using U of T online course development funds, to learn Sanskrit and be recorded on video each week as she completed a sample from the weekly exercises. The videos were processed through MyMedia and posted for use on the Blackboard LMS. Usage data were collected from both MyMedia and Blackboard. The MyMedia data proved to be less useful, because Google Analytics did not permit them to be individualised to the student, out of privacy concerns. But the Blackboard data were useful. For RLG 260, Fall 2017, for each section, August 15, 2017 to January 01, 2018, four types of data were assessed:

1. An “overall summary of user activity” and “course activity overview”, to see how the group used the LMS for the course.
2. “Single student activity overview” for each student that completed the course and did not cross sections, to see how those individual students used the LMS for the course.
3. “Content usage statistics” tracking for each individual Yumi video, to see how the group used each video.
4. For a single video (G3 Yumi does external vowel sandhi ex3A1), “content usage statistics” tracking for each student that completed the course and did not cross sections, to see how those individual students used that particular video.

The data were compared with final grades. |

|

| Lessons Learned (Challenges):

- Course evaluations and voluntary surveys provided information of limited value. The formative data question bank in the course evaluations did not permit me freedom to ask the questions I needed. I set up a voluntary survey of students who went on to take RLG 263. Nobody completed it.
- MyMedia data were less useful, because Google Analytics did not permit them to be individualised to the student, out of privacy concerns.
- The 180-day window for Blackboard LMS analytics is tight. Blackboard LMS analytics sometimes stalled. But the Blackboard LMS tools for arranging data were useful.
- The videos are well used. I am glad to see that.
- Usage does not correspond to success in terms of final grade in any grand manner.
- Usage did not differ between the two sections (online and on campus). |

|

| Course Design Iteration Plans:

- Having seen that the students readily use the videos, I will stop feeling the need to remind them to do it.
- More videos are being made, using a male student, to add variety of voice, thinking style, and gender. |

Plans for Sharing: Project will be on open.utoronto.ca for viewing and shared at CTSI programming. |

Measuring effectiveness of in-class activities and pre-class video content to enhance student learning.

Franco Taverna (Faculty of Arts and Science – Human Biology)

| Inquiry Topic: (Co-investigators: Franco Taverna, Michelle French, Melody Neumann) A greater understanding of methods to promote student learning has prompted educators to reassess their traditional lecturing approach. We (Franco Taverna, Michelle French, Melody Neumann) are developing an interactive model for teaching large classes. Specifically, video case studies, developed with a storytelling arc that spans three disciplines in biology, will be used in three foundational courses (yearly enrollment: 3500). Students will first view videos, then will work in small groups in class to answer questions that emphasize key concepts and their real-world application. To overcome the challenge of small group work in large classes, we will use a dynamic grouping and immediate feedback assessment tool called Team Up! (developed by Dr. Neumann). The tool allows students to form groups and then work together to reach a consensus answer, thereby actively encouraging student communication and peer-teaching. |

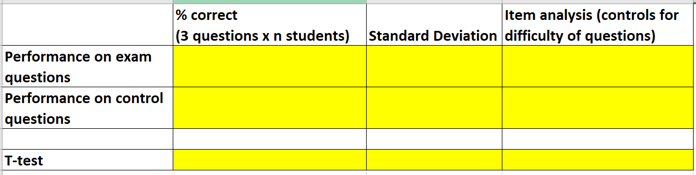

Example Analysis: The study will be conducted in the 2018-20 academic years. Example analysis of performance (a paired t-test will be used): Example survey questions (Likert scale: 1 Strongly Disagree, 2 Somewhat Disagree, 3 Neither Agree nor Disagree, 4 Somewhat Agree, 5 Strongly Agree)

|

| Inquiry Questions:

Our goal is to measure perceived and objective effectiveness of the in-class activities and pre-class video content to enhance student learning. Perceived effectiveness will be assessed by student and instructor surveys. Objective effectiveness will be measured by comparing student performance on term and exam questions related to the active learning component to overall test scores and to performance on content from a “control” lecture delivered in the traditional way without in-class activities. |

|

| Method(s) and Data Sources: See example analysis. The data sources include exam scores and attendance data from the Team Up! group activity tool, which are automatically recorded in the LMS. |
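The paired comparison named in the example analysis can be sketched in Python as follows. This is a minimal illustration, not the investigators' analysis: the function name `paired_t_statistic` and the scores are hypothetical. Only the t statistic and degrees of freedom are computed; a p-value would additionally need a t-distribution table or a statistics library.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_statistic(first, second):
    """Return (t, degrees of freedom) for a paired t-test on two matched samples."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two matched samples with at least 2 pairs")
    # Work on the per-student differences, which is what makes the test "paired".
    diffs = [a - b for a, b in zip(first, second)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("t is undefined when all differences are identical")
    n = len(diffs)
    return mean(diffs) / (sd / sqrt(n)), n - 1


# Hypothetical scores (NOT study data): each student's percentage on questions
# tied to the active-learning sessions vs. questions from the "control" lecture.
active_scores = [78, 85, 69, 90, 74, 81]
control_scores = [72, 80, 70, 84, 70, 79]

t, df = paired_t_statistic(active_scores, control_scores)
print(f"t = {t:.2f} with {df} degrees of freedom")
```

Because each student supplies both scores, differences between students cancel out of the comparison, although question difficulty can still differ between the two sets of questions.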

|

| Lessons Learned (Challenges): A major lesson learned in the project was the challenge of assessing performance in such a quasi-scientific design. Given that a randomly assigned, control-group type of design is not possible within our large introductory courses, there are some limitations on how the data can be interpreted. For example, when comparing performance of the same student across two sets of exam questions, it may be difficult to control for confounds such as the relative difficulty of the questions. |

|

| Course Design Iteration Plans: Should the data show that the in-class activity-based teaching results in better objective and perceived learning, more of that type of teaching activity would be designed and incorporated. |

Plans for Sharing: We plan on sharing our project results locally at conferences hosted by CTSI and nationally and internationally at teaching conferences. In addition, we plan to publish in teaching/learning journals. |

Effective instructional practices to facilitate online delivery and ensure online learning readiness of course participants.

Marie-Anne Visoi (Faculty of Arts and Science – French)

| Inquiry Topic: The present project explores aspects of social and teaching presence in the online French Cultural Studies Course FCS292H1S. Instead of relying solely on online lecture notes and texts, the course uses technology to provide the socio-historical and cultural background necessary for understanding challenging course concepts. More specifically, the project looks at the relationship between the instructor’s online presence and student engagement with the course material and online participation in the forum sessions. The study investigates the following aspects of asynchronous communication:

|

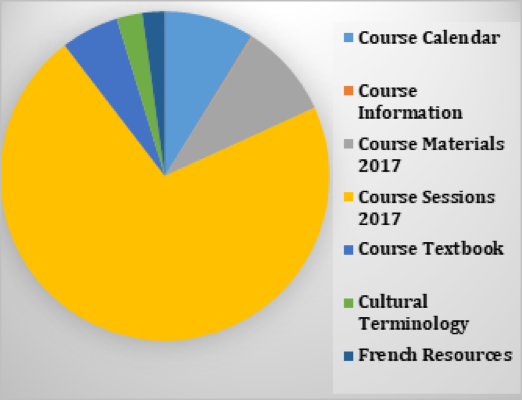

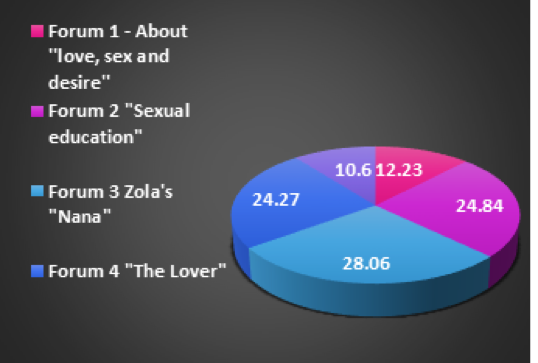

Example Analysis:

Example I – All User Activity Inside Content Area

The first example illustrates all user activity inside the content area: course sessions consisting of instructor videos, embedded PowerPoint presentations and enhanced media course materials. By providing socio-historical and cultural background information through photos, video clips and text-based documents, the course instructor can enrich the content of each course session and thus provide ample opportunities for students to familiarize themselves with the great figures and events which shaped the history of France before reading the assigned texts or watching the films. This type of insight is a key factor in the transmission of content expertise. The sequencing of course materials and the incorporation of reflection questions at the end of each course session can motivate students to communicate with the instructor and with their peers on topics they understand.

Example II – User Activity in Forum Sessions

The second example shows that the instructions regarding Discussion Board participation included in the syllabus, and the regular feedback on online collaboration provided through announcements and individual and group feedback, can motivate students to interact with their instructor and their peers. By establishing the practice of reflecting on the concepts studied after each session and then posting reflections and responses to others’ comments in thematic forum sessions, the instructor can clarify concepts, incorporate text or film-based feedback and redirect learning. As Example II illustrates, students need to familiarize themselves with specific content before starting to contribute to the Discussion Board. This can explain the reduced amount of activity in the first forum. As the instructor continues to provide feedback on student responses and uses examples to clarify content, students can become more engaged in online learning, as can be seen from the increased participation in the last three forum sessions. The final review forum can help students prepare for their final test by allowing them to ask specific questions related to the materials discussed in the course and to provide suggestions for future course design and collaboration. |

| Inquiry Questions:

Based on the belief that learning about French culture will provide opportunities for enhanced social and intercultural understanding, the interdisciplinary format of the course provides the foundation for an in-depth study of effective instructional practices used to facilitate online content delivery and ensure online learning readiness of course participants. The following inquiry questions will help determine important aspects of the instructor’s role in engaging students in the course content through asynchronous communication:

|

|

| Method(s) and Data Sources: A combination of methods and data sources was used to determine the effect of the social and teaching presence of the course instructor on student learning and online participation in the course.

Data obtained from all these sources was validated through close observation and analysis of students’ online participation and the quality of their written contributions, comments from the final review session and direct feedback from students. |

|

| Lessons Learned (Challenges): The study identified several effective instructional practices that can be used to engage students in their learning through asynchronous communication:

|

|

Course Design Iteration Plans:

By asking students to write entries regularly in the online forum sessions, the instructor can promote collaboration. Specific instructions in the course syllabus regarding the length of written comments, language correctness and accuracy, and the content to be discussed will help students formulate appropriate questions and comments. The qualitative feedback provided to students participating in the forum discussions will target key concepts presented in the course and help students clarify concepts learned, review materials or reflect on the topics presented in the instructor videos. |

Plans for Sharing:

International Conference Presentations |